Secondary Data – Types, Methods and Examples

Secondary data is a critical resource in research, providing a cost-effective and time-efficient means to gather insights and conduct analyses. It involves the use of pre-existing information collected for purposes other than the researcher’s current study. Understanding secondary data, its types, methods, and practical applications can enhance the quality and efficiency of research projects.

Secondary Data

Secondary data refers to information that has been collected, organized, and published by others for purposes other than the researcher’s current investigation. It is often derived from previously conducted studies, reports, surveys, or administrative records. Secondary data serves as an essential foundation for exploratory studies, comparative analysis, and literature reviews.

For example, a market researcher analyzing consumer behavior trends might use sales reports, census data, or prior survey results as secondary data.

Importance of Secondary Data

Secondary data plays a vital role in research due to the following benefits:

- Cost and Time Efficiency: It eliminates the need for primary data collection, saving resources.

- Availability of Longitudinal Data: Historical datasets allow researchers to analyze trends over time.

- Broad Accessibility: Secondary data is often publicly available or accessible through organizations and libraries.

- Supports Comparative Studies: By leveraging data from multiple sources, researchers can draw comparisons and generalizations.

Types of Secondary Data

1. Quantitative Data

Quantitative secondary data includes numerical information such as statistics, survey results, or financial records. Examples include census data, sales figures, and stock market indices.

2. Qualitative Data

Qualitative secondary data comprises non-numerical information such as written reports, interviews, or visual media. Examples include journal articles, company reports, and archived videos.

3. Internal Data

Internal secondary data refers to data collected and stored within an organization. Examples include:

- Sales records

- Employee performance evaluations

- Customer feedback reports

4. External Data

External secondary data is obtained from outside sources and includes information from:

- Government publications

- Academic research papers

- News articles

- Market research reports

Sources of Secondary Data

Secondary data is obtained from a variety of sources:

1. Published Sources

- Books, journals, and conference papers.

- Government reports, such as census and economic surveys.

- Online databases like Google Scholar or JSTOR.

2. Unpublished Sources

- Internal company documents.

- University theses and dissertations.

- Private records from organizations or individuals.

3. Online Sources

- Websites, blogs, and online articles.

- Publicly accessible data repositories.

- Social media platforms for sentiment and behavior analysis.

Methods of Collecting Secondary Data

1. Archival Research

Archival research involves exploring historical records, legal documents, or organizational archives to extract data relevant to the study.

2. Literature Review

Researchers systematically review books, articles, and reports to gather secondary data that supports the research’s theoretical framework.

3. Content Analysis

Content analysis involves studying qualitative data such as text, images, or videos to extract meaningful patterns and themes.
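As a minimal sketch of one simple form of content analysis, the Python snippet below counts how many text excerpts mention each theme. The excerpts, theme names, and keyword lists are hypothetical and chosen only for illustration; real content analysis would normally rely on a documented coding scheme and human judgment rather than keyword matching alone.

```python
import re
from collections import Counter

# Hypothetical coding scheme: theme -> keywords that signal it.
THEMES = {
    "price": {"price", "cost", "expensive", "cheap"},
    "service": {"service", "staff", "support"},
}

def code_documents(documents, themes=THEMES):
    """Count how many documents mention each theme at least once."""
    counts = Counter()
    for text in documents:
        # Lowercase and split into words so matching ignores case and punctuation.
        words = set(re.findall(r"[a-z']+", text.lower()))
        for theme, keywords in themes.items():
            if words & keywords:
                counts[theme] += 1
    return counts

# Hypothetical excerpts from archived customer feedback.
excerpts = [
    "The price was fair but the staff were slow.",
    "Too expensive for what you get.",
    "Support answered quickly and politely.",
]
theme_counts = code_documents(excerpts)
print(dict(theme_counts))
```

Counting documents rather than raw keyword hits keeps one long excerpt from dominating the tally, which is one possible design choice among several.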

4. Meta-Analysis

A meta-analysis aggregates and synthesizes quantitative data from multiple studies to derive broader conclusions.
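One common way to aggregate results is inverse-variance weighting under a fixed-effect model, where more precise studies count for more. The sketch below assumes each study reports an effect size and its standard error; the three studies and their numbers are hypothetical, and a real meta-analysis would also assess heterogeneity and may call for a random-effects model instead.

```python
import math

def fixed_effect_pool(effects, std_errors):
    """Pool study effects with inverse-variance weights (fixed-effect model)."""
    # Each study is weighted by 1 / variance, so precise studies count more.
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

# Hypothetical effect sizes and standard errors from three studies.
effects = [0.30, 0.10, 0.20]
std_errors = [0.10, 0.20, 0.10]

pooled, pooled_se = fixed_effect_pool(effects, std_errors)
ci_low = pooled - 1.96 * pooled_se
ci_high = pooled + 1.96 * pooled_se
print(f"pooled effect = {pooled:.3f} (95% CI {ci_low:.3f} to {ci_high:.3f})")
```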

Advantages of Secondary Data

- Saves Time and Resources: Researchers can access ready-made data without spending time on data collection.

- Provides Historical Context: Enables analysis of trends and changes over time using historical datasets.

- Wide Range of Information: Secondary data sources cover various topics and fields, offering a broader scope.

- Supports Hypothesis Testing: Existing data can validate or refute hypotheses without additional data collection.

Disadvantages of Secondary Data

- Lack of Specificity: Secondary data may not align perfectly with the researcher’s specific needs or objectives.

- Data Quality Issues: The accuracy, reliability, and validity of secondary data may be questionable if not verified.

- Outdated Information: Some secondary data might be obsolete, reducing its relevance for current research.

- Limited Control Over Data Collection: Researchers have no influence over how the data was originally collected.

Examples of Secondary Data in Research

Example 1: Market Research

A company evaluating potential new markets might use government census data and reports from market research firms to understand population demographics and consumer preferences.

Example 2: Academic Research

A researcher studying climate change might rely on previously published articles, datasets from meteorological agencies, and satellite images available in public repositories.

Example 3: Business Analysis

An entrepreneur assessing competition in an industry might use financial reports, industry surveys, and media articles about competitors.

Example 4: Healthcare Studies

A medical researcher analyzing the spread of diseases might use hospital records, public health reports, and prior epidemiological studies.

How to Evaluate the Quality of Secondary Data

To ensure reliability and validity, researchers must critically evaluate secondary data by considering:

- Source Credibility: Is the data sourced from a reputable organization, institution, or publication?

- Data Relevance: Does the data align with the research objectives?

- Timeliness: Is the data up-to-date and relevant to current conditions?

- Accuracy: Was the data collected using rigorous and transparent methods?

- Bias Check: Is there evidence of bias in the way the data was collected or reported?

Applications of Secondary Data

- Academic Research: Secondary data supports literature reviews, theoretical studies, and meta-analyses.

- Business Intelligence: Companies use it to analyze market trends, customer behavior, and competitor performance.

- Policy Making: Governments rely on secondary data to design policies, allocate resources, and assess social programs.

- Healthcare Studies: Researchers use existing patient data and medical reports to identify health trends and evaluate interventions.

Secondary data is a valuable resource for researchers, offering an efficient way to gather insights without conducting primary data collection. By understanding the types, sources, and methods of obtaining secondary data, researchers can leverage it effectively to answer their research questions and support their objectives. However, careful evaluation of secondary data is crucial to ensure its quality, relevance, and reliability. With proper application, secondary data can enhance the depth and breadth of research across disciplines.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

A Guide To Secondary Data Analysis

What is secondary data analysis? How do you carry it out? Find out in this post.

Historically, the only way data analysts could obtain data was to collect it themselves. This type of data is often referred to as primary data and is still a vital resource for data analysts.

However, technological advances over the last few decades mean that much past data is now readily available online for data analysts and researchers to access and utilize. This type of data—known as secondary data—is driving a revolution in data analytics and data science.

Primary and secondary data share many characteristics. However, there are some fundamental differences in how you prepare and analyze secondary data. This post explores the unique aspects of secondary data analysis. We’ll briefly review what secondary data are before outlining how to source, collect and validate them. We’ll cover:

- What is secondary data analysis?

- How to carry out secondary data analysis (5 steps)

- Summary and further reading

Ready for a crash course in secondary data analysis? Let’s go!

1. What is secondary data analysis?

Secondary data analysis uses data collected by somebody else. This contrasts with primary data analysis, which involves a researcher collecting predefined data to answer a specific question. Secondary data analysis has numerous benefits, not least that it is a time- and cost-effective way of obtaining data without doing the research yourself.

It’s worth noting here that secondary data may be primary data for the original researcher. It only becomes secondary data when it’s repurposed for a new task. As a result, a dataset can simultaneously be a primary data source for one researcher and a secondary data source for another. So don’t panic if you get confused! We explain exactly what secondary data is in this guide.

In reality, the statistical techniques used to carry out secondary data analysis are no different from those used to analyze other kinds of data. The main differences lie in collection and preparation. Once the data have been reviewed and prepared, the analytics process continues more or less as it usually does. For a recap on what the data analysis process involves, read this post.

In the following sections, we’ll focus specifically on the preparation of secondary data for analysis. Where appropriate, we’ll refer to primary data analysis for comparison.

2. How to carry out secondary data analysis

Step 1: Define a research topic

The first step in any data analytics project is defining your goal. This is true regardless of the data you’re working with, or the type of analysis you want to carry out. In data analytics lingo, this typically involves defining:

- A statement of purpose

- Research design

Defining a statement of purpose and a research approach are both fundamental building blocks for any project. However, for secondary data analysis, the process of defining these differs slightly. Let’s find out how.

Step 2: Establish your statement of purpose

Before beginning any data analytics project, you should always have a clearly defined intent. This is called a ‘statement of purpose.’ A healthcare analyst’s statement of purpose, for example, might be: ‘Reduce admissions for mental health issues relating to Covid-19’. The more specific the statement of purpose, the easier it is to determine which data to collect, analyze, and draw insights from.

A statement of purpose is helpful for both primary and secondary data analysis. It’s especially relevant for secondary data analysis, though. This is because there are vast amounts of secondary data available. Having a clear direction will keep you focused on the task at hand, saving you from becoming overwhelmed. Being selective with your data sources is key.

Step 3: Design your research process

After defining your statement of purpose, the next step is to design the research process. For primary data, this involves determining the types of data you want to collect (e.g. quantitative, qualitative, or both) and a methodology for gathering them.

For secondary data analysis, however, your research process will more likely be a step-by-step guide outlining the types of data you require and a list of potential sources for gathering them. It may also include (realistic) expectations of the output of the final analysis. This should be based on a preliminary review of the data sources and their quality.

Once you have both your statement of purpose and research design, you’re in a far better position to narrow down potential sources of secondary data. You can then start with the next step of the process: data collection.

Step 4: Locate and collect your secondary data

Collecting primary data involves devising and executing a complex strategy that can be very time-consuming to manage. The data you collect, though, will be highly relevant to your research problem.

Secondary data collection, meanwhile, avoids the complexity of defining a research methodology. However, it comes with additional challenges. One of these is identifying where to find the data. This is no small task because there are a great many repositories of secondary data available. Your job, then, is to narrow down potential sources. As already mentioned, it’s necessary to be selective, or else you risk becoming overloaded.

Some popular sources of secondary data include:

- Government statistics, e.g. demographic data, censuses, or surveys, collected by government agencies/departments (like the US Bureau of Labor Statistics).

- Technical reports summarizing completed or ongoing research from educational or public institutions (colleges or government).

- Scientific journals that outline research methodologies and data analysis by experts in fields like the sciences, medicine, etc.

- Literature reviews of research articles, books, and reports, for a given area of study (once again, carried out by experts in the field).

- Trade/industry publications, e.g. articles and data shared in trade publications, covering topics relating to specific industry sectors, such as tech or manufacturing.

- Online resources: Repositories, databases, and other reference libraries with public or paid access to secondary data sources.

Once you’ve identified appropriate sources, you can go about collecting the necessary data. This may involve contacting other researchers, paying a fee to an organization in exchange for a dataset, or simply downloading a dataset for free online.

Step 5: Evaluate your secondary data

Secondary data is usually well-structured, so you might assume that once you have your hands on a dataset, you’re ready to dive in with a detailed analysis. Unfortunately, that’s not the case!

First, you must carry out a careful review of the data. Why? To ensure that they’re appropriate for your needs. This involves two main tasks:

- Evaluating the secondary dataset’s relevance

- Assessing its broader credibility

Both these tasks require critical thinking skills. However, they aren’t heavily technical. This means anybody can learn to carry them out.

Let’s now take a look at each in a bit more detail.

The main point of evaluating a secondary dataset is to see if it is suitable for your needs. This involves asking some probing questions about the data, including:

What was the data’s original purpose?

Understanding why the data were originally collected will tell you a lot about their suitability for your current project. For instance, was the project carried out by a government agency or a private company for marketing purposes? The answer may provide useful information about the population sample, the data demographics, and even the wording of specific survey questions. All this can help you determine if the data are right for you, or if they are biased in any way.

When and where were the data collected?

Over time, populations and demographics change. Identifying when the data were first collected can provide invaluable insights. For instance, a dataset that initially seems suited to your needs may be out of date.

On the flip side, you might want past data so you can draw a comparison with a present dataset. In this case, you’ll need to ensure the data were collected during the appropriate time frame. It’s worth mentioning that secondary data are the sole source of past data. You cannot collect historical data using primary data collection techniques.

Similarly, you should ask where the data were collected. Do they represent the geographical region you require? Does geography even have an impact on the problem you are trying to solve?

What data were collected and how?

A final report from a past analytics project is great for summarizing key characteristics or findings. However, if you’re planning to use those data for a new project, you’ll need the original documentation. At the very least, this should include access to the raw data and an outline of the methodology used to gather them. This can be helpful for many reasons. For instance, you may find raw data that weren’t relevant to the original analysis, but which might benefit your current task.

What questions were participants asked?

We’ve already touched on this, but the wording of survey questions—especially for qualitative datasets—is significant. Questions may deliberately be phrased to preclude certain answers. A question’s context may also impact the findings in a way that’s not immediately obvious. Understanding these issues will shape how you perceive the data.

What is the form/shape/structure of the data?

Finally, to practical issues. Is the structure of the data suitable for your needs? Is it compatible with other sources or with your preferred analytics approach? This is purely a structural issue. For instance, if a dataset of people’s ages is saved as categorical variables (such as age bands) rather than as continuous variables, this could potentially impact your analysis. In general, reviewing a dataset’s structure helps you better understand how the data are categorized, allowing you to account for any discrepancies. You may also need to tidy the data to ensure they are consistent with any other sources you’re using.
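To make the structural point concrete, here is a small sketch of one way to reconcile two sources that record age differently: one stores exact ages, the other only age bands. The band boundaries and sample values are hypothetical. The approach recodes the finer-grained source to the coarser scheme, since exact ages cannot be recovered from bands.

```python
from collections import Counter

# Hypothetical age bands used by the secondary source: (lower, upper, label).
AGE_BANDS = [(0, 17, "0-17"), (18, 34, "18-34"), (35, 64, "35-64"), (65, 120, "65+")]

def to_band(age):
    """Map an exact age in years to the label of its band."""
    for lower, upper, label in AGE_BANDS:
        if lower <= age <= upper:
            return label
    raise ValueError(f"age out of range: {age}")

# Source A stores exact ages; source B only stores band labels.
source_a_ages = [16, 22, 35, 47, 70]
source_b_bands = ["18-34", "35-64", "65+"]

# Recode source A to the coarser scheme so both sources can be combined.
combined = [to_band(a) for a in source_a_ages] + source_b_bands
band_counts = Counter(combined)
print(band_counts)
```

Recoding loses detail, so it is worth recording the original values alongside the harmonized ones if later analysis might need them.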

This is just a sample of the types of questions you need to consider when reviewing a secondary data source. The answers will have a clear impact on whether the dataset—no matter how well presented or structured it seems—is suitable for your needs.

Assessing secondary data’s credibility

After identifying a potentially suitable dataset, you must double-check the credibility of the data. Namely, are the data accurate and unbiased? To figure this out, here are some key questions you might want to ask:

What are the credentials of those who carried out the original research?

Do you have access to the details of the original researchers? What are their credentials? Where did they study? Are they an expert in the field or a newcomer? Data collection by an undergraduate student, for example, may not be as rigorous as that of a seasoned professor.

And did the original researcher work for a reputable organization? What other affiliations do they have? For instance, if a researcher who works for a tobacco company gathers data on the effects of vaping, this represents an obvious conflict of interest! Questions like this help determine how thorough or qualified the researchers are and if they have any potential biases.

Do you have access to the full methodology?

Does the dataset include a clear methodology, explaining in detail how the data were collected? This should be more than a simple overview; it must be a clear breakdown of the process, including justifications for the approach taken. This allows you to determine if the methodology was sound. If you find flaws (or no methodology at all) it throws the quality of the data into question.

How consistent are the data with other sources?

Do the secondary data match with any similar findings? If not, that doesn’t necessarily mean the data are wrong, but it does warrant closer inspection. Perhaps the collection methodology differed between sources, or maybe the data were analyzed using different statistical techniques. Or perhaps unaccounted-for outliers are skewing the analysis. Identifying all these potential problems is essential. A flawed or biased dataset can still be useful but only if you know where its shortcomings lie.
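A quick first pass at this kind of consistency check can be sketched in Python. The example compares summary statistics for the same indicator from two hypothetical sources and flags outliers with Tukey's 1.5 × IQR rule, which is one common convention rather than the only option. All figures are invented for illustration.

```python
import statistics

def iqr_outliers(values):
    """Return values lying more than 1.5 * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [v for v in values if v < low or v > high]

# Hypothetical monthly figures for the same indicator from two sources.
source_a = [102, 98, 101, 97, 100, 103, 99]
source_b = [101, 99, 100, 98, 250, 102, 100]

for name, values in (("A", source_a), ("B", source_b)):
    print(
        name,
        "mean:", round(statistics.mean(values), 1),
        "median:", statistics.median(values),
        "outliers:", iqr_outliers(values),
    )
```

Here the two medians agree while the means diverge, which points to a single extreme value in source B rather than a systematic difference between the sources.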

Have the data been published in any credible research journals?

Finally, have the data been used in well-known studies or published in any journals? If so, how reputable are the journals? In general, you can judge a dataset’s quality based on where it has been published. If in doubt, check out the publication in question on the Directory of Open Access Journals. The directory has a rigorous vetting process, only permitting journals of the highest quality. Meanwhile, if you found the data via a blurry image on social media without cited sources, then you can justifiably question its quality!

Again, these are just a few of the questions you might ask when determining the quality of a secondary dataset. Consider them as scaffolding for cultivating a critical thinking mindset; a necessary trait for any data analyst!

Presuming your secondary data holds up to scrutiny, you should be ready to carry out your detailed statistical analysis. As we explained at the beginning of this post, the analytical techniques used for secondary data analysis are no different than those for any other kind of data. Rather than go into detail here, check out the different types of data analysis in this post.

3. Secondary data analysis: Key takeaways

In this post, we’ve looked at the nuances of secondary data analysis, including how to source, collect and review secondary data. As discussed, much of the process is the same as it is for primary data analysis. The main difference lies in how secondary data are prepared.

Carrying out a meaningful secondary data analysis involves spending time and effort exploring, collecting, and reviewing the original data. This will help you determine whether the data are suitable for your needs and if they are of good quality.

The Roles of a Secondary Data Analytics Tool and Experience in Scientific Hypothesis Generation in Clinical Research: Protocol for a Mixed Methods Study

Xia Jing, MD, PhD; Vimla L Patel, PhD; James J Cimino, MD; Jay H Shubrook, DO; Yuchun Zhou, PhD; Chang Liu, PhD; Sonsoles De Lacalle, MD, PhD

Corresponding Author: Xia Jing

Received 2022 May 9; Revision requested 2022 Jun 1; Revised 2022 Jun 18; Accepted 2022 Jun 21; Collection date 2022 Jul.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work, first published in JMIR Research Protocols, is properly cited. The complete bibliographic information, a link to the original publication on https://www.researchprotocols.org, as well as this copyright and license information must be included.

Background

Scientific hypothesis generation is a critical step in scientific research that determines the direction and impact of any investigation. Despite its vital role, we have limited knowledge of the process itself, thus hindering our ability to address some critical questions.

Objective

This study aims to answer the following questions: To what extent can secondary data analytics tools facilitate the generation of scientific hypotheses during clinical research? Are the processes similar in developing clinical diagnoses during clinical practice and developing scientific hypotheses for clinical research projects? Furthermore, this study explores the process of scientific hypothesis generation in the context of clinical research. It was designed to compare the role of VIADS, a visual interactive analysis tool for filtering and summarizing large data sets coded with hierarchical terminologies, and the experience levels of study participants during the scientific hypothesis generation process.

Methods

This manuscript introduces a study design. Experienced and inexperienced clinical researchers have been recruited since July 2021 to take part in this 2×2 factorial study, in which all participants use the same data sets during scientific hypothesis–generation sessions and follow predetermined scripts. The clinical researchers are separated into experienced or inexperienced groups based on predetermined criteria and are then randomly assigned, via block randomization, to groups that use or do not use VIADS. The study sessions, screen activities, and audio recordings of participants are captured. Participants use the think-aloud protocol during the study sessions. After each study session, every participant is given a follow-up survey, with participants using VIADS completing an additional modified System Usability Scale survey. A panel of clinical research experts will assess the scientific hypotheses generated by participants based on predeveloped metrics. All data will be anonymized, transcribed, aggregated, and analyzed.

Results

Data collection for this study began in July 2021. Recruitment uses a brief online survey. The preliminary results showed that study participants can generate a few to over a dozen scientific hypotheses during a 2-hour study session, regardless of whether they used VIADS or other analytics tools. A metric to more accurately, comprehensively, and consistently assess scientific hypotheses within a clinical research context has been developed.

Conclusions

The scientific hypothesis–generation process is an advanced cognitive activity and a complex process. Our results so far show that clinical researchers can quickly generate initial scientific hypotheses based on data sets and prior experience. However, refining these scientific hypotheses is a much more time-consuming activity. To uncover the fundamental mechanisms underlying the generation of scientific hypotheses, we need breakthroughs that can capture thinking processes more precisely.

International Registered Report Identifier (IRRID)

DERR1-10.2196/39414

Keywords: clinical research, observational study, scientific hypothesis generation, secondary data analytics tool, think-aloud method

Introduction

A hypothesis is an educated guess or statement about the relationship between 2 or more variables [1,2]. Scientific hypothesis generation is a critical step in scientific research that determines the direction and impact of research investigations. However, despite its vital role, we do not know the answers to some basic questions about the generation process. Some examples are as follows: “Can secondary data analytics tools facilitate the process?” and “Is the scientific hypothesis generation process for clinical research questions similar to differential diagnosis questions?” Traditionally, the scientific method involves delineating a research question and generating a scientific hypothesis. After formulating a scientific hypothesis, researchers design studies to test the scientific hypothesis to determine the answers to research questions [1,3].

Scientific hypothesis generation and scientific hypothesis testing are distinct processes [1,4]. In clinical research, research questions are often delineated without the support of systematic data analysis and are not data driven [1,5,6]. Using and analyzing existing data to facilitate scientific hypothesis generation is considered ecological research [7,8]. An ever-increasing amount of electronic health care data is becoming available, much of which is coded. These data can be a rich source for secondary data analysis, accelerating scientific discoveries [9]. Thus, many researchers have been exploring data-driven scientific hypothesis generation guided by secondary data analysis [1,10]. This work spans various fields, including genomics [4]. However, exactly how a scientific hypothesis is generated, even when guided by secondary data analysis in clinical research, is unknown. Understanding the detailed process of scientific hypothesis generation could improve the efficiency of delineating clinical research questions and, consequently, clinical research. Therefore, this study investigates the process of formulating scientific hypotheses guided by secondary data analysis. Using these results as a baseline, we plan to explore ways of supporting and improving the scientific hypothesis–generation process and to study the process of formulating research questions as long-term goals.

Electronic health record systems and related technologies have been widely adopted across the United States in both office-based physician practices (86% in 2019) [11] and hospitals (overall 86% in 2022), with adoption varying by hospital type [12]. Thus, vast amounts of electronic data are continuously captured and available for analysis to guide future decisions, uncover new patterns, or identify new paradigms in medicine. Much of the data is coded using hierarchical terminologies, and some of these commonly used terminologies include the International Classification of Diseases, 9th Revision-Clinical Modification (ICD9-CM) [13] and 10th Revision-Clinical Modification (ICD10-CM) [14], Systematized Nomenclature of Medicine-Clinical Terms (SNOMED-CT) [15], Logical Observation Identifiers Names and Codes (LOINC) [16], RxNorm [17], Gene Ontology [18], and Medical Subject Headings (MeSH) [19]. We used data sets coded with hierarchical terminologies as examples of existing data sets to facilitate and articulate the scientific hypothesis–generation process in clinical research, especially when guided by secondary data analyses. Algorithms [20,21] and a web-based secondary data analytics tool [22-25] were developed to use the coded electronic data (ICD9 or MeSH) in order to conduct population studies and other clinically relevant studies.

Arocha et al and Patel et al [26,27] studied the directionality of reasoning in scientific hypothesis–generation processes and evaluation strategies (of confirmation or disconfirmation) for solving a cardiovascular diagnostic problem by medical students (novice) and medical residents (experienced). The reasoning directions include forward (from evidence to a scientific hypothesis) and backward (from a scientific hypothesis to evidence). More experienced clinicians used their own underlying situational knowledge about the clinical condition, while the novices used the surface structure of the patient information during the diagnosis generation process. The studies by Patel et al [28,29] and Kushniruk et al [30] used inexperienced and experienced clinicians with different roles, levels of medical expertise, and corresponding strategies to diagnose an endocrine disorder. In these studies, expert physicians used more efficient strategies (integrating patient history and experts’ prior knowledge) to make diagnostic decisions [28,30,31]. All these studies focused on hypothesis generation in solving diagnostic problems. Their results set the groundwork for reasoning in the medical diagnostic process. Their findings regarding the generation of diagnostic hypotheses by experienced and inexperienced clinicians via different processes helped us formulate and narrow our research questions. Their methodology involved performing predefined tasks, recording “think-aloud” sessions, and transcribing and analyzing the study sessions.

Making a diagnosis is a critical component of medicine and a routine task for physicians. In contrast, generating scientific hypotheses in clinical research focuses on establishing a scientific hypothesis or doing further searches to explore alternative scientific hypotheses for research. The difference between the 2 lies in their goals and constraints. In clinical practice, the goal of generating a diagnostic hypothesis is to make decisions about patient care and the task is time constrained, while in scientific research, time is not similarly constrained and the task is to explore various scientific hypotheses to formulate and refine the final research question. In both making a medical diagnosis in clinical practice and scientific thinking, generating initial hypotheses depends on prior knowledge and experience [32,33]. However, in scientific thinking, analogies and associations play significant roles, in addition to prior knowledge, experience, and reasoning capability. Analogies are widely recognized as playing vital heuristic roles as aids to discovery [32,34], and these have been employed in a wide variety of settings and have had considerable success in generating insights and formulating possible solutions to existing problems.

This makes it essential to understand the scientific hypothesis–generation process in clinical research and to compare this process with generating clinical diagnoses, including the role of experience during the scientific hypothesis–generation process.

This study explores the scientific hypothesis–generation process in clinical research. It investigates whether a secondary data analytics tool and clinician experience influence the scientific hypothesis–generation process. We propose to use direct observations, think-aloud methods with video capture, follow-up inquiries and interview questions, and surveys to capture the participants’ perceptions of the scientific hypothesis–generation process and associated factors. The qualitative data generated will be transcribed, analyzed, and quantified.

We aim to test the following study hypotheses:

Experienced and inexperienced clinical researchers will differ in generating scientific hypotheses guided by secondary data analysis.

Clinical researchers will generate different scientific hypotheses with and without using a secondary data analytics tool.

Researchers’ levels of experience and use of secondary data analytics tools will interact in their scientific hypothesis–generation process.

In this paper, we used the term “research hypothesis” to refer to a statement generated by our research participants, the term “study hypothesis” to refer to the subject of our research study, and the term “scientific hypothesis” to refer to the general term “hypothesis” in research contexts.

This manuscript introduces a study design that uses a mixed methods approach. The study combines direct observation with utility and usability assessments. Surveys, interviews, semipredefined tasks, and screen activity capture are also used, as is a modified Delphi method.

Ethics Approval

The study has been approved by the Institutional Review Board (IRB) of Clemson University, South Carolina (IRB2020-056).

Participants and Recruitment

Experienced and inexperienced clinical researchers, and a panel of clinical research experts, have been recruited for this study. The primary criterion used to distinguish subjects in the 3 groups is the level of their experience in clinical research. Table 1 summarizes the requirements for clinical researchers, expert panel members, and the computers that clinical researchers use during the study sessions. Participants are compensated for their time according to professional organization guidelines.

Summary of the criteria for study participants and clinical research expert panel members.

a If a participant has clinical research experience between 2 and 5 years, the decisive factor for the experienced group will be 5 publications for original studies as the leading author.

To recruit participants, invitational emails and flyers have been sent to collaborators and distributed through other channels. These channels include mailing lists and newsletters, such as those of working groups of the American Medical Informatics Association, South Carolina Clinical & Translational Research Institute newsletters, and PRISMA health research newsletters, as well as Slack channels, such as National COVID Cohort Collaborative communities. All study sessions are conducted remotely via video conference software (Webex, Cisco) and recorded via commercial software (BB FlashBack, Blueberry Software).

Introduction to VIADS

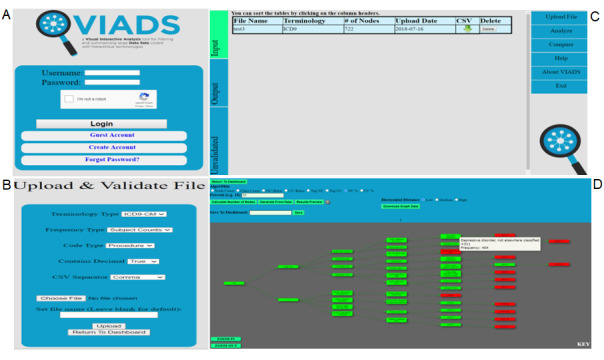

In this study, we have used VIADS as an example of a secondary data analytics tool. VIADS is a visual interactive analytical tool for filtering and summarizing large health data sets coded with hierarchical terminologies [ 22 , 23 , 35 ]. It is a cost-free web-based tool available for research and educational purposes, and it can be used by both registered users and guest users without registration. VIADS and the underlying algorithms were developed previously by the authors [ 20 , 21 , 24 ]. VIADS was designed to use codes and usage frequencies from terminologies with hierarchical structures to achieve the following objectives: (1) provide summary visualizations, such as graphs, of data sets; (2) filter data sets to ensure manageable sizes based on user selection of algorithms and thresholds; (3) compare similar data sets and highlight the differences; and (4) provide interactive, customizable, and downloadable features for the graphs generated from the data sets. VIADS is a secondary data analytics tool that can facilitate decision-making by medical administrators, clinicians, and clinical researchers. For example, VIADS can be used to track a hospital’s longitudinal data and to detect diagnosis trends and differences over time. VIADS can also be used to compare 2 similar medications and the medical events associated with them in order to provide detailed evidence to guide more precise clinical use of the medications [ 20 ]. A prior study used the algorithms of VIADS to provide evidence of the different information needs of physicians and nurses [ 21 ]. Figure 1 shows example screenshots of VIADS.

Selected screenshots of VIADS. (A) Homepage; (B) validation module; (C) dashboard; (D) a graph coded using International Classification of Diseases, 9th Revision-Clinical Modification (ICD9-CM) codes and generated by VIADS.

Meanwhile, we recognize that VIADS can only accept coded clinical data and their associated use frequencies. Currently, VIADS can accept data sets coded using ICD9-CM, ICD10-CM, and MeSH. This can limit the types of scientific hypotheses that can be generated with VIADS.

Preparation of Test Data Sets

We have prepared and used the same data sets for this study across different groups in order to reduce the potential biases introduced by different data sets. In general use, however, data sets (ie, input files) for VIADS are prepared by users within specific institutions. Table 2 summarizes the final format of data sets and the minimum acceptable sizes of data sets needed for analysis in VIADS. The current version of VIADS is designed to accept data sets coded by the 3 types of terminologies (ICD9-CM, ICD10-CM, and MeSH) listed in Table 2 . No identifiable patient information is included in the data sets used in VIADS, as the data sets contain only the node identification (ie, terminology code) and usage frequencies.

Acceptable formats and data set sizes in VIADS.

a Acceptable data set sizes for Web VIADS are as follows: patient counts ≥100 and event counts ≥1000.

b ICD9-CM: International Classification of Diseases, 9th Revision-Clinical Modification.

c There are many more codes in addition to the 2 examples provided.

d ICD10-CM: International Classification of Diseases, 10th Revision-Clinical Modification.

e MeSH: Medical Subject Headings.

The usage frequencies of the data sets used in VIADS can be either of the following: patient counts (the number of patients associated with specific ICD codes in the selected database) or event counts (the number of events [ICD codes or MeSH terms] in the selected database).
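The distinction between the 2 frequency types can be illustrated with a minimal Python sketch. This is not the VIADS preprocessing code; the function name `usage_frequencies`, the input layout (one `(patient_id, code)` pair per recorded event), and the sample records are assumptions made for illustration only.

```python
from collections import defaultdict


def usage_frequencies(encounters):
    """Return (patient_counts, event_counts) keyed by terminology code.

    encounters: iterable of (patient_id, code) pairs, one pair per recorded
    event (hypothetical input layout, not the VIADS file format).
    """
    event_counts = defaultdict(int)
    patients_by_code = defaultdict(set)
    for patient_id, code in encounters:
        event_counts[code] += 1                  # every occurrence counts
        patients_by_code[code].add(patient_id)   # each patient counts once per code
    patient_counts = {code: len(ids) for code, ids in patients_by_code.items()}
    return patient_counts, dict(event_counts)


# Illustrative records: patient p1 has the same code recorded twice.
encounters = [
    ("p1", "250.00"),
    ("p1", "250.00"),
    ("p2", "250.00"),
    ("p2", "401.9"),
]
patient_counts, event_counts = usage_frequencies(encounters)
```

In this toy input, the repeated record for the same patient raises the event count for that code but not its patient count.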

An ancestor-descendant table, which contains 1 row for each pairing of a node with one of its distinct descendants, makes it easier to calculate class counts accurately because no node is counted more than once. These implementation details have been discussed in prior publications [ 20 , 21 ].
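The idea can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the published VIADS algorithm: the helper names `build_ancestor_descendant` and `class_counts`, the `parents` mapping, and the toy hierarchy are all hypothetical, and a class count is taken here to mean a node's own count plus the counts of all of its distinct descendants.

```python
def build_ancestor_descendant(parents):
    """Return the set of distinct (ancestor, descendant) pairs.

    parents: dict mapping each node to a list of its direct parents
    (hypothetical representation of a hierarchical terminology).
    """
    pairs = set()
    for node in parents:
        stack = list(parents[node])
        while stack:
            ancestor = stack.pop()
            if (ancestor, node) not in pairs:    # skip pairs already recorded
                pairs.add((ancestor, node))
                stack.extend(parents.get(ancestor, []))
    return pairs


def class_counts(node_counts, pairs):
    """Add each descendant's own count to every one of its ancestors once."""
    totals = dict(node_counts)
    for ancestor, descendant in pairs:
        totals[ancestor] = totals.get(ancestor, 0) + node_counts.get(descendant, 0)
    return totals


# Toy hierarchy in which D is reachable from A through both B and C.
parents = {"A": [], "B": ["A"], "C": ["A"], "D": ["B", "C"]}
node_counts = {"A": 1, "B": 2, "C": 3, "D": 4}
pairs = build_ancestor_descendant(parents)
totals = class_counts(node_counts, pairs)
```

In the toy hierarchy, a naive recursive sum over children would add D's count to A twice (once through B and once through C). Because the pair (A, D) appears only once in the table, D contributes to A's class count a single time.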

A publicly accessible data source [ 36 ], including ICD9-CM codes, has been used to generate the needed input data sets. The patient counts are used as frequencies. Although ICD10-CM codes are now used in the United States, ICD9-CM data spanning the past several decades are available in most institutions across the country. Therefore, ICD9-CM codes have been used to obtain historical and longitudinal perspectives.

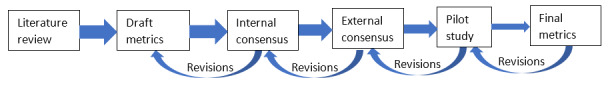

Instrument Development

Metrics have been developed to assess research hypotheses generated during the study sessions. The development process goes through iterative stages ( Figure 2 ) via Qualtrics surveys, emails, and phone calls. First, a literature review is conducted to outline draft metrics. Then, the draft metrics are discussed and iteratively revised until all concerns are addressed. Next, the revised metrics are distributed to the entire research team for feedback. The internal consensus processes follow a modified Delphi method [ 37 ]. Modifications at this point primarily include transparent and open discussions conducted via email among the research team and anonymous survey responses received before and after discussions. The main difference between our modification and the traditional Delphi method is the transparent discussion among the whole team via emails between the rounds of surveys.

Development process for metrics to evaluate research hypotheses in clinical research.

The performance of scientific hypothesis–generation tasks will be measured using metrics that include the following qualitative and quantitative measures: validity, significance, clinical relevance, feasibility, clarity, testability, ethicality, number of total scientific hypotheses, and average time used to generate 1 scientific hypothesis. In an online survey, the panel of clinical research experts will assess the generated research hypotheses based on the metrics we have developed.

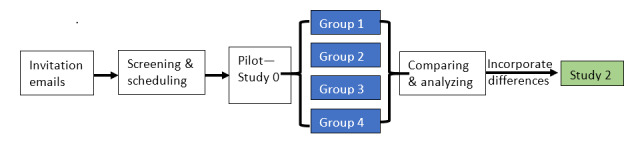

A survey ( Multimedia Appendix 1 ) is administered for the first 4 groups at the end of the research hypothesis–generation study sessions ( Figure 3 ). The groups that use VIADS also complete a modified System Usability Scale (SUS) questionnaire ( Multimedia Appendix 2 ) evaluating the usability and utility of VIADS. The follow-up or inquiry questions and parameters generated during the think-aloud process used in Study 1 ( Figure 3 ) are as follows:

Summary of the study procedures. Blue boxes indicate data collected in Study 1.

What are the needed but currently unavailable attributes that would help clinical researchers generate research hypotheses? The question is similar to a wish list of features facilitating research hypothesis generation.

Follow-up questions clarify potential confusion during the think-aloud processes or enable meaningful inquiries when unexpected or novel questions emerge during observations [ 38 ]. However, these questions have been kept to a minimal level to avoid interrupting clinical researchers’ thinking processes. This method complements the data from the think-aloud video captures.

Can the list of items in Multimedia Appendix 3 , which has been compiled from traditional clinical research textbooks [ 1 - 3 , 8 , 39 ] on scientific hypothesis generation and research question formulation, facilitate clinical researchers’ research hypothesis generation when guided by secondary data analysis?

A survey will be developed based on the comparative results of the first 4 groups and administered at the end of Study 2. The identified differences from Study 1 will be the focus of the survey to determine whether these differences are helpful in Study 2.

Study Design

Study 1 tests all 3 study hypotheses. If the study hypotheses are supported in Study 1, we will conduct a follow-up study (Study 2) to examine whether and how the efficiency or quality of research hypothesis generation may be improved or refined.

We will use the think-aloud method to conduct the following tasks: (1) observe the research hypothesis–generation process; (2) transcribe and analyze the data to assess whether VIADS [ 22 ] and clinical researchers’ levels of experience influence the process; and (3) assess the interactions between VIADS (as an example of a secondary data analytics tool) and the experience levels of the participants during the process. Figure 3 summarizes the Study 1 and Study 2 procedures.

Experienced and inexperienced clinical researchers conduct research hypothesis–generation tasks using the same secondary data sets. The tasks are captured to describe participants’ research hypothesis–generation processes when guided by analysis of the same data sets. VIADS is also used as an example of a secondary data analytics tool to assess participants’ research hypothesis–generation processes. Accordingly, 2 control groups do not use VIADS, while 2 intervention groups do use it.

A pilot study, Study 0, was conducted in July and August 2021 for each group to assess the feasibility of using the task flow, data sets, screen capture, audio and video recordings, and study scripts. In Study 1, 4 groups are utilized. Table 3 summarizes the study design and participants in each group. The 4 groups will be compared in order to detect the primary effects of the 2 factors and their interactions after completing Study 1. After recruitment, the participants are separated into experienced or inexperienced groups based on predetermined criteria. Then, the participants are randomly assigned to a group that uses VIADS (3-hour session, with 1 hour to conduct VIADS training) or does not use VIADS (2-hour session) via block randomization.

Design of Study 1 for assessment of the hypothesis–generation process in clinical research.

We conduct 1 study session individually with each participant. The participants are given the same data sets from which to generate research hypotheses with or without VIADS during each study session. The same researcher observes the entire process and captures the process via think-aloud video recordings. Follow-up or inquiry questions from the observing researcher are used complementarily during each study session. The study sessions are conducted remotely via Webex. All screen activities are captured and recorded via BB FlashBack [ 40 ].

If the results of Study 1 indicate group differences as expected (in particular, differences between experienced and inexperienced clinical researchers, along with differences between groups using VIADS and not using VIADS), Study 2 will be conducted to examine whether the efficiency of the research hypothesis–generation process could be improved. Specifically, we will analyze the group differences, identify those related to VIADS, and incorporate corresponding revisions into VIADS. Then, we will test whether the revised and presumably improved VIADS increases the efficiency or quality of the research hypothesis–generation process. In this process, the group that has the lowest performance in Study 1 will be invited to use the revised VIADS to conduct research hypothesis–generation tasks again with the same data sets. However, at least 8 months will be allowed to pass in order to provide an adequate wash-out period. This group’s performance will be compared with its performance in Study 1.

If no significant difference can be detected between the groups using VIADS and not using VIADS in Study 1, we will use the usability and utility survey results to revise VIADS accordingly without conducting Study 2. If no significant difference can be detected between experienced and inexperienced clinical researchers, Study 2 will recruit both experienced and inexperienced clinical researchers as study participants. In this case, Study 2 will focus only on whether a revised version of VIADS impacts the research hypothesis–generation process and outcomes.

Data and Statistical Analysis

The qualitative data collected via the think-aloud method while participants conduct the given tasks will be transcribed, coded, and analyzed according to grounded theory [ 41 , 42 ], a classical qualitative data analysis method. This data analysis has not yet begun because data are still being collected. Combined analysis of discourse [ 30 ], video recordings of the study sessions, and screen activities will be conducted. The main components or patterns that we will focus on during analysis include potential nonverbal steps, sequential ordering among different components (such as prioritization of the use of either experience or data) across groups, seeking and processing evidence, analyzing data, generating inferences, making connections, formulating a hypothesis, and searching for information needed to generate research hypotheses. Ideally, based on video analyses and observations, we plan to develop a framework for the scientific hypothesis–generation process in clinical research guided by secondary data analytics. Similar frameworks exist in education and learning areas [ 43 ], but do not currently exist in the field of clinical research.

The outcome variable used is based on the participants’ performance in research hypothesis–generation tasks. The performance is measured by the quality (eg, significance and validity) and quantity of the research hypotheses generated through the tasks and the average time to generate 1 research hypothesis. At least three clinical research experts will assess each hypothesis using the scientific hypothesis assessment metrics that were developed for this study. The metrics include multiple items, each on a 5-point Likert scale. The details of the metrics are described in the Instrument Development section.

The data will be analyzed with a 2-tailed factorial analysis. We calculated the required sample size in G*Power 3.1.9.7 for a 2-way ANOVA. The sample size was 32 based on a confidence level of 95% (α=.05), effect size f=0.5, and power level of 0.8 (β=.20).
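The quantities implied by these parameters can be made concrete with a small Python sketch. It does not reproduce the G*Power power calculation; it only applies the usual conventions for a fixed-effects ANOVA (noncentrality parameter λ = f² × N and η² = f² / (1 + f²)) to the reported values. The function name `design_summary` and the assumption that participants are split evenly across the 4 cells of the 2×2 design are illustrative.

```python
def design_summary(total_n, effect_size_f, levels_a=2, levels_b=2):
    """Summarize a balanced factorial design from its total N and Cohen's f.

    Assumes equal allocation across cells (an assumption for illustration).
    """
    cells = levels_a * levels_b
    f_squared = effect_size_f ** 2
    return {
        "cells": cells,
        "per_cell": total_n / cells,              # participants per group
        "noncentrality": f_squared * total_n,     # lambda = f^2 * N
        "eta_squared": f_squared / (1 + f_squared),
    }


# Values reported in the text: N = 32, f = 0.5.
summary = design_summary(total_n=32, effect_size_f=0.5)
```

With N=32 and f=0.5, equal allocation gives 8 participants per group, and f=0.5 corresponds to η² of 0.2, which is a large effect by Cohen's conventions.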

In Study 1, we will use descriptive statistics to report how many hypotheses were generated, average time spent per hypothesis, and how many hypotheses were evaluated for each participant. A 2-way ANOVA will be used to examine the main effects of VIADS and experience, as well as the interaction effect of the 2 factors. In the ANOVA, the outcome variable is the expert evaluation score. The follow-up survey data will be analyzed using correlations to examine the relationship between participants’ self-rated creativity, the average time per hypothesis generation, the number of hypotheses generated, and the expert evaluation score. The SUS will be used to assess the usability of VIADS. Qualitative data from the SUS surveys will be used to guide revisions of VIADS after Study 1. Descriptive statistics will also be used to report the answers to other follow-up questions.
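The structure of the planned 2-way ANOVA can be sketched in plain Python for a balanced design. This is an illustration only, not the study's analysis code: the function name `two_way_anova_balanced`, the factor labels, and the scores are invented, equal group sizes are assumed, and p-values are omitted because they require the F distribution from a statistics library.

```python
from statistics import mean


def two_way_anova_balanced(cells):
    """Return {effect: (F, df_effect, df_error)} for a balanced 2-factor design.

    cells: dict mapping (level_a, level_b) -> list of scores, with the same
    number of scores in every cell.
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(scores) != n for scores in cells.values()):
        raise ValueError("this sketch requires equal cell sizes")

    all_scores = [x for scores in cells.values() for x in scores]
    grand = mean(all_scores)
    a_mean = {a: mean([x for b in b_levels for x in cells[(a, b)]]) for a in a_levels}
    b_mean = {b: mean([x for a in a_levels for x in cells[(a, b)]]) for b in b_levels}
    cell_mean = {key: mean(scores) for key, scores in cells.items()}

    # Sums of squares for the 2 main effects, the interaction, and the error.
    ss_a = n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum(
        (cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
        for a in a_levels
        for b in b_levels
    )
    ss_error = sum((x - cell_mean[key]) ** 2 for key, scores in cells.items() for x in scores)

    df_a, df_b = len(a_levels) - 1, len(b_levels) - 1
    df_ab = df_a * df_b
    df_error = len(all_scores) - len(a_levels) * len(b_levels)
    ms_error = ss_error / df_error
    return {
        "factor_a": (ss_a / df_a / ms_error, df_a, df_error),
        "factor_b": (ss_b / df_b / ms_error, df_b, df_error),
        "interaction": (ss_ab / df_ab / ms_error, df_ab, df_error),
    }


# Invented expert evaluation scores; factor A = experience, factor B = tool use.
scores = {
    ("experienced", "VIADS"): [4.0, 5.0],
    ("experienced", "no VIADS"): [3.0, 4.0],
    ("inexperienced", "VIADS"): [3.0, 4.0],
    ("inexperienced", "no VIADS"): [2.0, 3.0],
}
result = two_way_anova_balanced(scores)
```

In the invented data, both factors shift the mean score by the same amount and the shifts are purely additive, so the interaction sum of squares is zero. An unbalanced design would need a different decomposition of the sums of squares.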

A t test will be conducted to determine whether the revised VIADS improves the performance of research hypothesis generation in Study 2.

The study is a National Institutes of Health–funded R15 (Research Enhancement Award) project supported by the National Library of Medicine. We began collecting data in July 2021 via pilot studies, and here provide some preliminary results and summarize our early observations. The full results and analysis of the study will be shared in future publications when we complete the study.

Instruments

Based on a literature review, metrics were developed to assess research hypotheses [ 1 , 2 , 4 , 6 - 9 , 39 ]. The dimensions most commonly used to evaluate clinical research hypotheses include clinical and scientific validity; significance (regarding the target population, cost, and future impact); novelty (regarding new knowledge, impact on practice, and new methodology); clinical relevance (regarding medical knowledge, clinical practice, and policies); potential benefits and risks; ethicality; feasibility (regarding cost, time, and the scope of the work); testability; clarity (regarding purpose, focused groups, variables, and their relationships); and researcher interest level (ie, willingness to pursue).

Multiple items were used to measure the quality for each dimension mentioned above. For each item, a 5-point Likert scale (1=strongly disagree, 5=strongly agree) was used for measurement. After internal consensus, we conducted external consensus and sought feedback from the external expert panel via an online survey [ 44 ]. The metrics are revised continuously by incorporating feedback. The expert panel will use our online survey [ 45 ] to evaluate research hypotheses generated by research participants during the study sessions.

We have developed the initial study scripts for Study 1 and have revised them after the pilot study sessions (Study 0). We have developed the screening survey for the recruitment process. The follow-up survey is administered after each study session, regardless of the group. The standard SUS survey [ 46 , 47 ] has been modified to add one more option in order to allow users to elaborate on what caused any dissatisfaction during the usability study.
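For reference, the conventional scoring of the 10 standard SUS items can be sketched in Python. The function name `sus_score` is hypothetical, and the sketch assumes that the additional free-text option in the modified questionnaire is not part of the numeric score; the text does not specify how the modified instrument is scored.

```python
def sus_score(responses):
    """Score the 10 standard SUS items (each rated 1 to 5) on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("the standard SUS has exactly 10 items")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("ratings must be between 1 and 5")
        if item_number % 2 == 1:
            total += rating - 1      # odd items are positively worded
        else:
            total += 5 - rating      # even items are negatively worded
    return total * 2.5


best_case = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
neutral_case = sus_score([3] * 10)
```

The alternating adjustment reflects the alternation of positively and negatively worded items in the standard questionnaire, so that a higher score always indicates better perceived usability.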

Recruitment

Currently, we are recruiting all levels of participants, including inexperienced clinical researchers, experienced clinical researchers, and a panel of clinical research experts. Recruitment began in July 2021 with pilot study participants. To participate, anyone involved in clinical research can share their contact email address by filling out the screening survey [ 48 ]. So far, we have completed 16 study sessions with inexperienced clinical researchers who have either used or not used VIADS in Study 1.

For this study, we are using data from the National Ambulatory Medical Care Survey (NAMCS) conducted by the Centers for Disease Control and Prevention [ 36 ]. The NAMCS is a publicly accessible data set of survey results related to clinical encounters in ambulatory settings. We processed raw NAMCS data (ICD9 codes and accumulated frequencies) from 2005 and 2015 to prepare the needed data sets for VIADS based on our requirements.

The experience level of the clinical researchers was determined by predetermined criteria. To determine which group a participant joins within the inexperienced (groups 2 and 4) or experienced (groups 1 and 3) category, we used the blockrand package [ 49 ] for the R statistical software to implement block randomization. The random block sizes range from 2 to 6 participants.
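Permuted block randomization with randomly varying block sizes can be sketched in Python as follows. This is an illustration of the general technique, not the blockrand implementation used in the study; the function name `block_randomize`, the arm labels, the choice of block sizes 2, 4, and 6, and the seed are assumptions.

```python
import random


def block_randomize(n_participants, arms=("VIADS", "no VIADS"),
                    block_sizes=(2, 4, 6), seed=None):
    """Return a list of arm assignments built from randomly sized permuted blocks.

    Each block contains every arm equally often, so the arms stay close to
    balanced at any point in the enrollment sequence.
    """
    rng = random.Random(seed)
    assignments = []
    while len(assignments) < n_participants:
        size = rng.choice(block_sizes)              # random block size
        block = list(arms) * (size // len(arms))    # equal share per arm
        rng.shuffle(block)                          # random order within the block
        assignments.extend(block)
    return assignments[:n_participants]


# One schedule per experience stratum (here, a hypothetical stratum of 16).
schedule = block_randomize(16, seed=2021)
```

Running the function separately for the experienced and inexperienced strata keeps the 2 arms balanced within each experience level, and varying the block size makes the next assignment harder to predict than with a fixed block size.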

Initial Observations

We have noticed that participants used both forward [ 27 ] and backward reasoning during the study sessions. In addition, some participants did not start from data or a hypothesis. Instead, their reasoning started from their own focused (and often familiar) area of knowledge, which was related to several ICD9 codes. The research hypotheses were then developed after examining the data on that focus area.

Many participants did not use any advanced analysis during the study sessions. However, they did use their prior experience and knowledge to generate research hypotheses based on the frequency rank of the provided data sets and by comparing the 2 years of data (ie, 2005 vs 2015).

Noticeably, VIADS can answer more complicated questions both systematically and more rapidly. However, we noticed that the training session required to enable use of VIADS increased participants’ cognitive load. Cognitive load refers to the amount of working memory resources required during the task of thinking and reasoning. Without a comprehensive analysis, we cannot yet draw further conclusions about the potential effects of this cognitive load.

Significance of the Study

A critical first step in the life cycle of any scientific research study is formulating a valid and significant research question, which can usually be divided into several scientific hypotheses. This process is often challenging and time-consuming [ 1 , 3 , 38 , 50 ]. Currently, there is limited practical guidance regarding generating research questions [ 38 ] beyond emphasizing that it requires long-term experience, observation, discussion, and exploration of the literature. A scientific hypothesis–generation process will eventually help to formulate relevant research questions. Our study aims to decipher the process of scientific hypothesis generation and determine whether a secondary data analytics tool can facilitate the process in a clinical research context. When combined with clinical researchers’ experiences and observations, such tools can be anticipated to facilitate scientific hypothesis generation. This facilitation will improve the efficiency and accuracy of scientific hypothesis testing, formulating research questions, and conducting clinical research in general. We also anticipate that an explicit description of the scientific hypothesis–generation process with secondary data analysis may provide more feasible guidance for clinical research design newcomers (eg, medical students and new clinical investigators). However, we have not completed all study sessions, so we cannot yet analyze the collected data in order to draw meaningful conclusions.

Interpretation of the Study and Results

Participants have been observed to use analogical reasoning [ 51 ] both consciously and subconsciously; meanwhile, some participants verbally expressed that they intentionally avoided analogical reasoning in order to be more creative during the study sessions. These participants intentionally did not use the same pattern of statements for all the topics supported by the data sets. The way we organized the data sets seems to prompt participants to think systematically when using the data sets. For instance, the use frequencies of ICD9 codes were sorted from high to low in each data set. However, what would constitute the right balance between systematic structure and randomness during scientific hypothesis generation is unknown. Intuitively, both systematic reviews and random connections should be critical in generating novel ideas in general, regardless of academic settings or industrial environments. Concrete evidence is needed before any conclusions can be drawn about the relationship between the 2 during scientific hypothesis generation. Additionally, the current version of VIADS can only accept data coded using ICD9-CM, ICD10-CM, and MeSH. This inevitably limits the types of hypotheses that can be generated with VIADS. We also recognize that other more broadly used hierarchical terminologies, such as SNOMED CT, RxNorm, and LOINC, could provide additional valuable information related to more comprehensive aspects of clinical care. However, the current version of VIADS cannot use such information.

Analyzing the research hypothesis–generation process may include several initial cognitive components. These components can consist of searching for, obtaining, compiling, and processing evidence; seeking help to analyze data; developing inferences using obtained evidence and prior knowledge; searching for external evidence, including literature or prior notes; seeking connections between evidence and problems; considering feasibility, testability, ethicality, and clarity; drawing conclusions; formulating draft research hypotheses; and polishing draft research hypotheses [ 1 - 3 , 8 , 39 , 52 ]. These initial components will be used to code the recorded think-aloud sessions to compare differences among groups.

We recognize that research hypothesis generation and the long refining and improving process matter most during the study sessions. Without technologies to capture what occurs cognitively during the research hypothesis–generation process, we may not be able to answer fundamental questions regarding the mechanisms of scientific hypothesis generation.

Establishing the evaluation metrics to assess research hypotheses is the first step and the critical foundation of the overall study. The evaluation metrics used will determine the quality measurements of the research hypotheses generated by study participants during the study sessions.

Research hypothesis evaluation is subjective, but metrics can help standardize the process to some extent. Although the metrics may not guarantee a precise or perfectly objective evaluation of each research hypothesis, such metrics provide a consistent instrument for this highly sophisticated cognitive process. We anticipate that a consistent instrument will help to standardize the expert panel’s evaluations. Additionally, objective measures, such as the number of research hypotheses generated by the study participants and the average time each participant spends generating each research hypothesis, will be used in the study. The expert panel is therefore expected to provide more consistent research hypothesis evaluations with the combined metrics and objective measures.

Although developing metrics appears linear, as presented in Figure 2 , the process itself is highly iterative. No revision occurs only once. Reflecting on the first 3 stages of development, we observe that the major revisions involved separating questions in the survey and refining the options for the questions. These steps reduce ambiguity.

Many challenges have been encountered while conducting the research hypothesis–generation study sessions. These include the following:

What can be considered a research hypothesis? What will not be considered a research hypothesis? The response will determine which research hypotheses will be evaluated by the panel of clinical research experts.

How should the research hypothesis be measured accurately? Although we developed workable metrics, the metrics are not yet perfect.

How can we accurately capture thinking, reasoning, and networking processes during the research hypothesis–generation process? Currently, we use the think-aloud method. Although think-aloud protocols can capture valuable information about the thinking process, we recognize that not all processes can be articulated during the experiments, and not everyone can articulate their processes accurately or effectively.

What happens when a clinical researcher examines and analyzes a data set and generates a research hypothesis subconsciously?

How can we capture the roles of the external environment, internal cognitive capacity, existing knowledge base of the participant, and interactions between the individual and the external world in these dynamic processes?

When faced with challenges, we see opportunities for researchers to further explore and identify a clearer picture of research hypothesis generation in clinical research. We believe that the most pressing target is developing new technologies in order to capture what clinical researchers do and think when generating research hypotheses from a data set. Such technologies can promote breakthroughs in cognition, psychology, computer science, artificial intelligence, neurology, and clinical research in general. In clinical research, such technologies can help empower clinical researchers to conduct their tasks more efficiently and effectively.

Lessons Learned

We learned some important lessons while designing and conducting this study. The first lesson involved balancing the study design (straightforward or complicated) and conducting the study (feasibility). During the design stage, we were concerned that the 2×2 study design was too simple, even though we know it does not negatively impact the value of the research. We simply considered experience levels and whether the participants used VIADS in a very complicated cognitive process. However, even for such a straightforward design, only 1 experienced clinical researcher has volunteered so far. Thus, we will first focus on inexperienced clinical researchers. Even for study sessions involving inexperienced clinical researchers, considerable time is needed to determine strategies for coding and analyzing the raw data. In order to design a complicated experiment that answers more complex questions, we must consider balancing practical workload, recruitment reality, expected timeline, and researchers’ desire to pursue a complex research question.

Recruitment is always challenging. Many of our panel invitations to clinical research experts either received no response or were declined, which, together with the effects of the COVID-19 pandemic, significantly delayed the study timeline. Furthermore, the IRB approval process was time-consuming and delayed the study whenever we needed to revise study documents. Therefore, the study timeline includes the IRB initial review and rereview cycles.

Future Work

The first future direction for this project is to explore the feasibility of formulating research questions based on research hypotheses. The current project looks for ways to improve the efficiency of generating research hypotheses; the next step will be to explore whether we can also enhance the efficiency of formulating research questions.

A possible direction for future work is to develop tools to facilitate scientific hypothesis generation guided by secondary data analysis. We may explore automating the process or incorporating all positive attributes in order to guide the process better and improve efficiency and quality.

At the end of our experiments, we asked clinical researchers what facilitates their scientific hypothesis–generation process the most. Several mentioned repeatedly reading academic literature and discussing ideas with colleagues. We believe intelligent tools can undoubtedly improve both aspects of scientific hypothesis generation, namely, by summarizing new publications in the chosen topic areas and by providing conversational support to clinical researchers. This would be a natural extension of our studies.

An additional possible direction is to expand the terminologies that can be used by VIADS; for example, RxNorm, LOINC, and SNOMED CT could be added in the future.

Acknowledgments

The project is supported by a grant from the National Library of Medicine of the United States National Institutes of Health (R15LM012941), and is partially supported by the National Institute of General Medical Sciences of the National Institutes of Health (P20 GM121342). The content is solely the authors’ responsibility and does not necessarily represent the official views of the National Institutes of Health.

Abbreviations

ICD: International Classification of Diseases

ICD-9-CM: International Classification of Diseases, 9th Revision-Clinical Modification

ICD-10-CM: International Classification of Diseases, 10th Revision-Clinical Modification

IRB: Institutional Review Board

LOINC: Logical Observation Identifiers Names and Codes

MeSH: Medical Subject Headings

NAMCS: National Ambulatory Medical Care Survey

SNOMED CT: Systematized Nomenclature of Medicine-Clinical Terms

SUS: System Usability Scale

Follow-up survey at the end of the hypothesis-generation tasks.

VIADS System Usability Scale survey and utility questionnaire.

List of items to consider during hypothesis generation.

Data Availability

A request for aggregated and anonymized transcription data can be made to the corresponding author, and the final decision on data release will be made on a case-by-case basis, as appropriate. The complete analysis and the results will be published in future manuscripts.

Conflicts of Interest: None declared.
